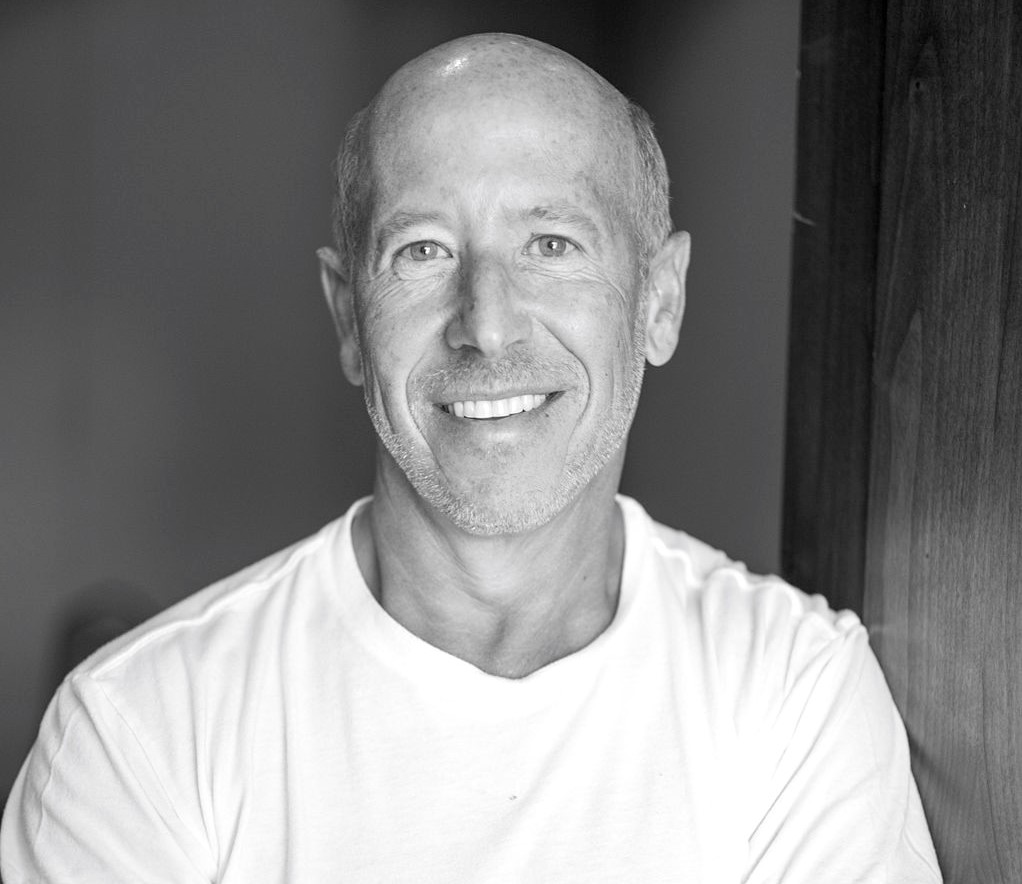

Billionaire real estate investor Barry Sternlicht says Starwood Capital Group is fully prepared to embrace real-world asset (RWA) tokenization but remains sidelined due to regulatory barriers in the United States. Speaking at the World Liberty Forum in Palm Beach, Sternlicht emphasized that his firm, which manages more than $125 billion in assets, is ready to tokenize real estate and other physical holdings using blockchain technology.

“We want to do it right now and we’re ready,” Sternlicht said, expressing frustration that clients cannot yet transact real estate investments through blockchain-based tokens. He described the current regulatory environment as restrictive and said it is holding back broader adoption of digital-asset innovation in traditional finance.

Tokenization converts ownership of real-world assets, such as real estate, art, or private equity stakes, into digital tokens recorded on a blockchain. Because these tokens can be traded more efficiently than traditional ownership records, tokenization could increase liquidity, reduce administrative costs, and broaden investor access. For large asset managers like Starwood Capital, tokenized real estate could open new capital-raising channels while modernizing outdated, paper-heavy systems.
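To make the idea of fractional, token-based ownership concrete, here is a minimal sketch of the bookkeeping a tokenized property implies. It is an illustration only: `TokenizedAsset`, `issue`, `transfer`, and `ownership_share` are hypothetical names, and the ledger is a plain in-memory Python dictionary standing in for a blockchain. It does not model any real token standard, smart contract, or Starwood or Propy system, and it ignores compliance checks that regulated securities would require.

```python
from dataclasses import dataclass, field


@dataclass
class TokenizedAsset:
    """Hypothetical in-memory ledger for one tokenized property (not a real blockchain)."""
    asset_id: str
    total_tokens: int
    balances: dict = field(default_factory=dict)

    def issue(self, owner: str) -> None:
        # Assign the entire token supply to the initial owner, e.g. the sponsoring fund.
        if self.balances:
            raise ValueError("tokens already issued")
        self.balances[owner] = self.total_tokens

    def transfer(self, sender: str, receiver: str, amount: int) -> None:
        # Move whole tokens between holders; reject non-positive amounts and overdrafts.
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount

    def ownership_share(self, holder: str) -> float:
        # Fraction of the underlying asset represented by the holder's tokens.
        return self.balances.get(holder, 0) / self.total_tokens


# Usage: split a hypothetical building into one million tokens and sell 2,500 to an investor.
asset = TokenizedAsset("building-A", 1_000_000)
asset.issue("fund")
asset.transfer("fund", "alice", 2_500)
print(asset.ownership_share("alice"))  # prints 0.0025
```

The point of the sketch is that ownership becomes a balance that can be moved in small units, which is where the liquidity and access arguments come from; a production system would add identity, transfer restrictions, and on-chain settlement.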

Although blockchain adoption in property markets is still emerging, some companies are already advancing the concept. Propy, for example, announced plans for a $100 million expansion aimed at acquiring mid-sized U.S. title firms to streamline real estate transactions through blockchain solutions.

Industry projections highlight significant growth potential. Deloitte estimates that $4 trillion worth of real estate could be tokenized by 2035, up from less than $300 billion in 2024, representing a compound annual growth rate of 27%. According to the firm, tokenized real estate could create new investment products, improve operational efficiency, lower administrative expenses, and increase retail participation in previously illiquid markets.
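The 27% figure can be sanity-checked from the two endpoints Deloitte cites. The sketch below applies the standard compound annual growth rate formula, (end / start)^(1 / years) - 1, assuming a starting value of exactly $300 billion in 2024 and $4 trillion in 2035 (11 years). Since the source says "less than $300 billion," the true starting point would imply a slightly higher rate.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints in billions of dollars: ~$300B (2024) growing to $4,000B (2035).
rate = cagr(300, 4_000, 2035 - 2024)
print(f"{rate:.0%}")  # prints 27%
```

With a $300 billion base the rate works out to roughly 26.5%, which rounds to the 27% Deloitte reports.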

Sternlicht called blockchain-based tokenization “the future,” arguing that the technology is superior and at an earlier stage of development than artificial intelligence. He described tokenization as transformative and inevitable, saying the world simply needs time, and regulatory clarity, to catch up.
